Fulltext technology

Overview

The following paragraphs briefly describe what happens after you enter a query into the search box.

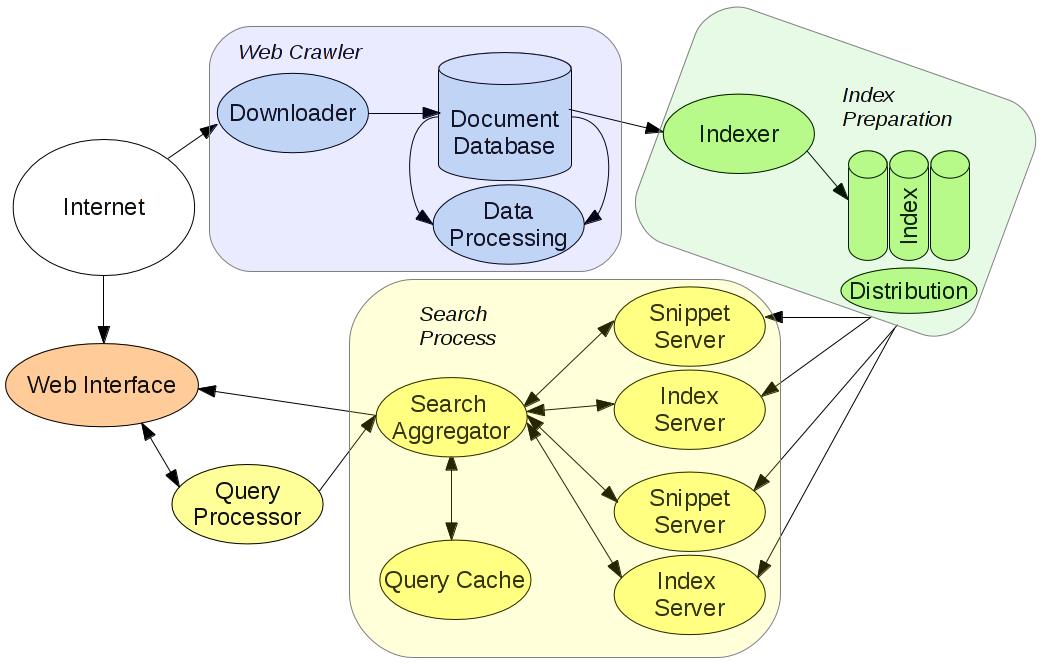

The web interface and the query processor process the phrase first. For example, spelling is checked and possible diacritics are added. The processed phrase (the query) is sent to the search aggregators and then to the index servers. Each index server searches the index for relevant documents and returns its most relevant results to a search aggregator. The search aggregator selects the 10 most relevant results and sends a request to a snippet server, which adds the page title and content excerpts (snippets) to each of the 10 results. These results are then returned as the answer to your query. All of these steps are performed online.

Data are downloaded from the Internet by a web crawler and saved to a document database. The documents are then indexed and stored in the index. The document database is updated regularly, at intervals ranging from weekly and daily to every few minutes.

The following diagram shows the data flow:

The following sections describe each component in more detail.

1. Query Processor

The query processor processes the phrase you entered: it checks spelling, expands abbreviations, and adds possible synonyms and diacritics to the query sent to the search aggregator. This widens the range of relevant results and returns relevant results even if the phrase is entered incorrectly.

The query processor uses a set of jobs, each of which modifies the entered phrase in one of the following ways:

- searches for two-word collocations

- determines topics of the phrase

- expands abbreviations, or creates them

- splits compound words, or creates them

- identifies whether the user entered an exact phrase

- creates related words

- creates alternative notations of numbers or abbreviations

- identifies words in the searched phrase which can be omitted during the search process

- searches for commas and splits the searched phrase at them

- searches for a domain name structure in the searched phrase

- converts numbers to their token representation or to text

- expands ligatures

- merges tokens separated with a + or & character
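The job-based design can be sketched as a pipeline in which each job receives the current token list and returns a modified one. This is a minimal sketch, assuming a query is a flat list of lowercase tokens; the function names and the small ligature and number tables are hypothetical, and only three of the jobs listed above (ligature expansion, merging tokens around + or &, converting numbers to text) are modeled.

```python
import re
from typing import Callable, List

# Hypothetical job signature: token list in, modified token list out.
Job = Callable[[List[str]], List[str]]

# Hypothetical, deliberately tiny lookup tables.
LIGATURES = {"\ufb01": "fi", "\ufb02": "fl", "\u00e6": "ae", "\u0153": "oe"}
NUMBER_WORDS = {"1": "one", "2": "two", "3": "three", "4": "four", "5": "five"}


def tokenize(phrase: str) -> List[str]:
    """Split into lowercase words, keeping '+' and '&' as separate tokens."""
    return re.findall(r"\w+|[+&]", phrase.lower())


def expand_ligatures(tokens: List[str]) -> List[str]:
    """Replace ligature characters with the letters they stand for."""
    out = []
    for tok in tokens:
        for lig, letters in LIGATURES.items():
            tok = tok.replace(lig, letters)
        out.append(tok)
    return out


def merge_joined_tokens(tokens: List[str]) -> List[str]:
    """Merge 'at', '&', 't' into 'at&t' (the same for '+')."""
    out: List[str] = []
    i = 0
    while i < len(tokens):
        if tokens[i] in ("+", "&") and out and i + 1 < len(tokens):
            out[-1] = out[-1] + tokens[i] + tokens[i + 1]
            i += 2
        else:
            out.append(tokens[i])
            i += 1
    return out


def numbers_to_text(tokens: List[str]) -> List[str]:
    """Replace known number tokens with their word form."""
    return [NUMBER_WORDS.get(tok, tok) for tok in tokens]


def process(phrase: str, jobs: List[Job]) -> List[str]:
    """Run every job in order over the tokenized phrase."""
    tokens = tokenize(phrase)
    for job in jobs:
        tokens = job(tokens)
    return tokens


JOBS: List[Job] = [expand_ligatures, merge_joined_tokens, numbers_to_text]
```

For example, `process("AT & T 5 \ufb01le", JOBS)` returns `["at&t", "five", "file"]`. Keeping each job as an independent function makes it easy to add, remove, or reorder jobs without touching the others.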

2. Search Aggregator

The search aggregator receives a query from the query processor and first sends a request to a query cache to check whether a similar query has already been performed.

The query cache stores the most recent results, ready to be displayed to the user. These stored results already contain snippets.

If the query cache does not contain suitable results, the query is sent to the index servers. After the index servers process the query, the 10 most relevant results are returned to the search aggregator.
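The cache-first lookup can be sketched as follows. This is a minimal sketch, assuming a least-recently-used (LRU) eviction policy and treating two queries as "similar" when they match after lowercasing and collapsing whitespace; the eviction policy, the normalization, and the `search_index` callback are all hypothetical, since the text does not specify them.

```python
from collections import OrderedDict
from typing import Callable, List, Optional

Result = str  # hypothetical: one snippet-ready result


class QueryCache:
    """LRU cache mapping a normalized query to its final results."""

    def __init__(self, capacity: int = 1000):
        self.capacity = capacity
        self._data: "OrderedDict[str, List[Result]]" = OrderedDict()

    def get(self, query: str) -> Optional[List[Result]]:
        if query not in self._data:
            return None
        self._data.move_to_end(query)  # mark as recently used
        return self._data[query]

    def put(self, query: str, results: List[Result]) -> None:
        self._data[query] = results
        self._data.move_to_end(query)
        if len(self._data) > self.capacity:
            self._data.popitem(last=False)  # evict least recently used


def aggregate(query: str, cache: QueryCache,
              search_index: Callable[[str], List[Result]]) -> List[Result]:
    """Answer from the cache if possible; otherwise query the index servers."""
    key = " ".join(query.lower().split())  # hypothetical "similarity" rule
    cached = cache.get(key)
    if cached is not None:
        return cached
    results = search_index(key)[:10]  # keep the 10 most relevant
    cache.put(key, results)
    return results
```

With this sketch, `"White  Cat"` and `"white cat"` share one cache entry, so the second lookup never reaches the index servers.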

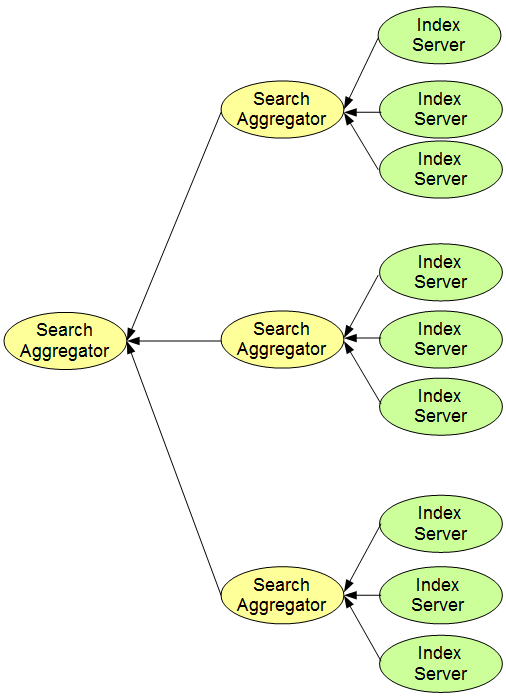

Search aggregators are organized in a tree structure. Each search aggregator receives results from several index servers and selects the 10 best results. A higher-level search aggregator connected to these search aggregators then selects the 10 most suitable results from all of their results (see the figure above).

The last search aggregator in the tree (the root) sends a request to a snippet server to obtain snippets. The 10 results are then sent directly to the web interface.
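The tree of aggregators can be sketched as a recursive top-k merge. This is a minimal sketch, assuming each index server returns `(score, document id)` pairs where a higher score means more relevant; the `Aggregator` class and the score representation are hypothetical.

```python
import heapq
from dataclasses import dataclass
from typing import List, Tuple, Union

Hit = Tuple[float, str]  # hypothetical: (relevance score, document id)
TOP_K = 10


def select_top(result_lists: List[List[Hit]], k: int = TOP_K) -> List[Hit]:
    """Merge the children's result lists and keep the k best by score."""
    merged = (hit for results in result_lists for hit in results)
    return heapq.nlargest(k, merged, key=lambda hit: hit[0])


@dataclass
class Aggregator:
    # A child is either another aggregator or one index server's result list.
    children: List[Union["Aggregator", List[Hit]]]

    def top(self, k: int = TOP_K) -> List[Hit]:
        child_results = [
            child.top(k) if isinstance(child, Aggregator) else child
            for child in self.children
        ]
        return select_top(child_results, k)


# Six hypothetical index servers, split between two leaf aggregators.
servers = [[(float(i * 100 + j), f"s{i}-d{j}") for j in range(20)]
           for i in range(6)]
root = Aggregator([Aggregator(servers[:3]), Aggregator(servers[3:])])
best = root.top()
```

Passing only 10 results up each level is enough: any document in the global top 10 must also be in the top 10 of the subtree it came from, so nothing relevant is lost while network traffic stays small.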

3. Index Server

The index server searches the search database index. There are many index servers (approximately 200), and each one searches a different index database. As a result, different index servers return different results.

If the searched phrase contains more than one word, the result is an intersection: only documents that contain all of the words are returned. The index server also determines how relevant each document is to the searched phrase. For example, if the searched phrase is “white cat”, the found documents must not only contain both words “white” and “cat”, but the words should also be close to each other. A document in which the words appear next to each other is more relevant than a document in which the same words appear in different places.
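Intersection and proximity can be sketched together. This is a minimal sketch, assuming a hypothetical positional index that stores, for each word, the positions at which it occurs in each document. Ranking only by the smallest window that contains every query word is a simplification; the actual relevance model is not described here.

```python
import heapq
from typing import Dict, List, Set, Tuple

# Hypothetical positional index: word -> {doc_id: sorted positions of the word}
PositionalIndex = Dict[str, Dict[str, List[int]]]


def intersect(index: PositionalIndex, words: List[str]) -> Set[str]:
    """Documents that contain every query word."""
    doc_sets = [set(index.get(w, {})) for w in words]
    return set.intersection(*doc_sets) if doc_sets else set()


def min_span(position_lists: List[List[int]]) -> int:
    """Size of the smallest window holding one occurrence of each word.

    Expects every list to be sorted and non-empty (true for documents
    returned by intersect()).
    """
    heap = [(pos[0], i, 0) for i, pos in enumerate(position_lists)]
    heapq.heapify(heap)
    current_max = max(p for p, _, _ in heap)
    best = current_max - heap[0][0]
    while True:
        low, i, j = heapq.heappop(heap)
        best = min(best, current_max - low)
        if j + 1 == len(position_lists[i]):
            return best  # one list is exhausted; no smaller window remains
        nxt = position_lists[i][j + 1]
        current_max = max(current_max, nxt)
        heapq.heappush(heap, (nxt, i, j + 1))


def rank(index: PositionalIndex, words: List[str]) -> List[Tuple[int, str]]:
    """(span, doc_id) pairs, closest word groupings first."""
    docs = intersect(index, words)
    return sorted(
        (min_span([sorted(index[w][doc]) for w in words]), doc) for doc in docs
    )


index: PositionalIndex = {
    "white": {"d1": [4], "d2": [0], "d3": [7]},
    "cat": {"d1": [5], "d2": [30], "d4": [1]},
}
```

For the query “white cat”, `rank(index, ["white", "cat"])` returns `[(1, "d1"), (30, "d2")]`: `d3` and `d4` are dropped by the intersection, and `d1`, where the words are adjacent, ranks above `d2`.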

4. Snippet Server

The snippet server adds all document information (URL, page title, content, links, etc.) to the 10 results. It also creates snippets: dynamic descriptions and highlighted text shown in the results returned to the web interface.
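A dynamic snippet can be sketched as a short window of the document text around the first query-word match, with matching words highlighted. This is a minimal sketch, assuming whitespace tokenization, a fixed window of 8 words, and HTML `<b>` tags for highlighting; the window-selection rule, the `make_snippet` name, and the markup are all hypothetical.

```python
import re
from typing import List


def _normalize(word: str) -> str:
    """Lowercase and strip punctuation so 'Cat.' matches 'cat'."""
    return re.sub(r"\W", "", word.lower())


def make_snippet(text: str, query_words: List[str], width: int = 8) -> str:
    """Return about `width` words around the first match, matches in <b>."""
    words = text.split()
    targets = {w.lower() for w in query_words}
    # Index of the first matching word, or 0 if nothing matches.
    first = next((i for i, w in enumerate(words) if _normalize(w) in targets), 0)
    start = max(0, first - width // 2)
    window = words[start:start + width]
    highlighted = [f"<b>{w}</b>" if _normalize(w) in targets else w
                   for w in window]
    prefix = "... " if start > 0 else ""
    suffix = " ..." if start + width < len(words) else ""
    return prefix + " ".join(highlighted) + suffix
```

Because the window depends on where the query words occur, the same page gets a different snippet for different queries, which is what makes the description dynamic.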

Although there are as many snippet servers as index servers, a snippet server is used only on those server machines whose index servers found one of the final results. This reduces traffic on the server machines: snippet servers handle large amounts of data compared to index servers, so they are queried only rarely.
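The routing rule can be sketched as grouping the final results by the machine that found them. This is a minimal sketch, assuming each final result carries the name of the machine whose index server found it; the `(doc_id, machine)` representation and the function name are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Result = Tuple[str, str]  # hypothetical: (doc_id, machine that found it)


def plan_snippet_requests(top_results: List[Result]) -> Dict[str, List[str]]:
    """Group the final results by machine.

    Only the machines that appear here are contacted for snippets; all
    other snippet servers stay idle for this query.
    """
    requests: Dict[str, List[str]] = defaultdict(list)
    for doc_id, machine in top_results:
        requests[machine].append(doc_id)
    return dict(requests)
```

With 10 final results, at most 10 of the roughly 200 machines receive a snippet request, and a machine that found several results receives them in one batched request.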

5. Index

A clustered index database stores the indexed documents. The current implementation consists of the following parts:

- word barrel – stores a list of documents for each known word, together with the number of occurrences (hits) of the word in each document

- document barrel – stores a list of all documents in a bundle

- title barrel – stores processed web pages content and metadata

- query site barrel – stores how many unique queries performed from one site contained a specific word

- site barrel – stores a list of documents for sites

- link barrel – stores hyperlinks pointing to web pages

- qds barrel – stores signal values of feedback rank for a specific query

- queryurl barrel – stores which unique queries lead to a specific URL

6. Indexer-worker

The main purpose of the indexer-worker is to prepare documents from the document database to be stored in the index.

The indexer-worker performs the following actions upon documents:

- determines whether a document is in the HTML format

- determines a language

- checks whether a page is spam

The indexer-worker splits a document into individual words and builds an inverted index: each word receives a list of the documents in which it occurs.
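Inverted indexing can be sketched in a few lines. The output has the same shape as the word barrel described in section 5 (for each word, the documents containing it and the number of hits), although the actual on-disk format is not described. This is a minimal sketch, assuming a simple regular-expression tokenizer; the function name and the `{doc_id: text}` input format are hypothetical.

```python
import re
from collections import Counter, defaultdict
from typing import Dict


def build_inverted_index(docs: Dict[str, str]) -> Dict[str, Dict[str, int]]:
    """Map each word to {doc_id: number of occurrences (hits)}."""
    index: Dict[str, Dict[str, int]] = defaultdict(dict)
    for doc_id, text in docs.items():
        # Split into lowercase words and count hits per word.
        counts = Counter(re.findall(r"\w+", text.lower()))
        for word, hits in counts.items():
            index[word][doc_id] = hits
    return dict(index)


docs = {"d1": "White cat, white dog", "d2": "Black cat"}
index = build_inverted_index(docs)
```

Here `index["white"]` is `{"d1": 2}` and `index["cat"]` is `{"d1": 1, "d2": 1}`, so a query word leads directly to the documents that contain it without scanning any document text.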

7. Web Crawler

The web crawler and its subcomponent, the fresh web crawler, regularly download web pages (documents) and analyze them. While the web crawler downloads all web pages daily, the fresh web crawler is designed to download rapidly changing pages, such as newspaper articles, at very short intervals.

The web crawler comprises:

- A document database – a clustered database that stores all documents along with the content of documents, ranks, calculated statistics, incoming links, etc.

- Hkeeper – the core part of the web crawler: it plans all downloading, keeps track of the state of each document, manages the creation and deletion of documents, and prepares documents to be downloaded by the fresh web crawler.

- LinkRevert – analyzes incoming links and determines their language and topic, which may help determine the language or topic of the target document even before the document has been downloaded.

- Indexfeeder – selects the documents to be indexed, since only some documents are of sufficient quality to be used in search. The Indexfeeder distributes the selected documents into folders that are then processed by the indexer-worker.

- Downloaders – regularly browse the Internet and download web pages.

- Download manager – manages downloaders.
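Download planning of the kind Hkeeper performs can be sketched with a priority queue ordered by each document's next due time. This is a minimal sketch, assuming every document has a fixed re-download interval; the actual planning policy is not described, and the `Scheduler` class, the intervals, and the example URLs are hypothetical.

```python
import heapq
from dataclasses import dataclass, field
from typing import List


@dataclass(order=True)
class Task:
    next_due: float                            # only field used for ordering
    url: str = field(compare=False)
    interval: float = field(compare=False)     # seconds between downloads


class Scheduler:
    """Hypothetical planner: hands out documents whose download is due."""

    def __init__(self) -> None:
        self._queue: List[Task] = []

    def add(self, url: str, interval: float, now: float = 0.0) -> None:
        if interval <= 0:
            raise ValueError("interval must be positive")
        heapq.heappush(self._queue, Task(now, url, interval))

    def due(self, now: float) -> List[str]:
        """Return every URL due at `now` and reschedule it one interval later."""
        ready = []
        while self._queue and self._queue[0].next_due <= now:
            task = heapq.heappop(self._queue)
            ready.append(task.url)
            heapq.heappush(self._queue,
                           Task(now + task.interval, task.url, task.interval))
        return ready


s = Scheduler()
s.add("https://news.example/a", interval=300)   # rapidly changing page
s.add("https://example.org/b", interval=86400)  # ordinary page, daily
```

The first call to `s.due(0)` returns both URLs. After that, the news page comes due every 300 seconds and the ordinary page only once a day, mirroring the split between the fresh web crawler and the daily web crawler.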

The fresh web crawler is a simplified version of the web crawler, with slightly different components:

- LinkFeeder – parses the downloaded RSS and HTML feeds

- Fresh database – stores data needed to plan feeds.

The downloaders and download managers are the same as in the web crawler, but there is no Hkeeper; instead, the fresh web crawler uses data exported daily from the document database.
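The RSS part of the LinkFeeder's job, extracting article links from a downloaded feed, can be sketched with the standard library XML parser. This is a minimal sketch, assuming RSS 2.0 feeds in which each `<item>` has a `<link>` element; HTML feeds, Atom feeds, and malformed XML are not handled, and the function name and example feed are hypothetical.

```python
import xml.etree.ElementTree as ET
from typing import List


def links_from_rss(xml_text: str) -> List[str]:
    """Return the <link> of every <item> in an RSS 2.0 feed."""
    root = ET.fromstring(xml_text)
    links = []
    for item in root.iter("item"):  # skips the channel's own <link>
        link = item.findtext("link")
        if link:
            links.append(link.strip())
    return links


feed = """<rss version="2.0"><channel><title>News</title>
<link>https://news.example/</link>
<item><title>A</title><link>https://news.example/a</link></item>
<item><title>B</title><link> https://news.example/b </link></item>
</channel></rss>"""
```

`links_from_rss(feed)` returns `["https://news.example/a", "https://news.example/b"]`. These links are the candidates that the fresh web crawler's downloaders would fetch next.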