History of web search

Let’s take a brief look at the history of Seznam.cz’s web search:

- Seznam.cz is a Czech company founded in 1996. In those days our key product was a web catalogue, which could be regarded as the industry standard at the time. We also offered news and lists of top sites.

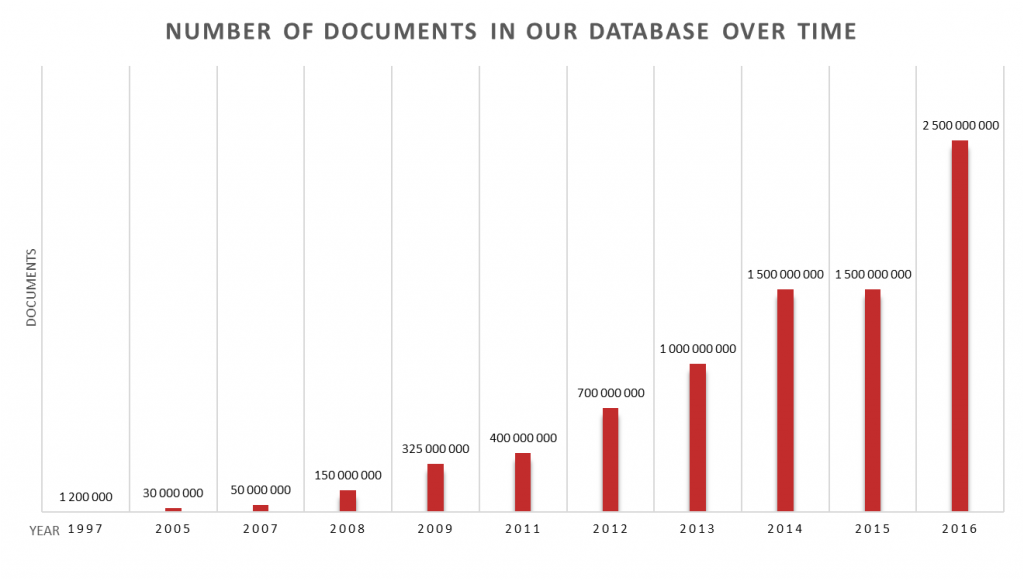

- In 1997 we launched our own full-text search engine, Kompas (“Compass”), which searched through 1.2 million pages. For the first time, Kompas let users enter queries containing accented characters.

- Over the next few years we continually worked on improving our services. We briefly tried using Google Search in 2002, but decided not to take this path.

- Google wasn’t the only option: we also tried Empyreum and Jyxo, but in 2005 we eventually decided to develop our own search engine. Our database held 30 million Czech documents, and the service, built by just 4 people, ran on 14 servers.

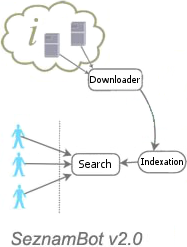

- To make web indexing faster and better, in 2007 we updated our crawler to a new version called SeznamBot/2.0. With this version the architecture became scalable; it was based on multiple MySQL databases.

- In 2008 we introduced a blind-friendly search engine results page (SERP) and started indexing non-HTML documents such as PDF and DOC files.

- In 2009 we started using Microsoft Bing for searching foreign pages. We also greatly improved user intent recognition, which resulted in a giant leap in the relevance of search results. Thanks to all of this, we received the Křišťálová Lupa award in the Search engines and databases category. We also updated our screenshot generator, which supplies the thumbnails shown in our search results.

- As the number of documents and features increased, the search process became more and more demanding. In 2010 the search ran on more than 100 servers, a significant share of which were used by the crawler.

- Another updated version of our crawler, SeznamBot/3.0, was introduced in 2011 and brought a major change in technology: it uses Hadoop instead of MySQL. We started evaluating the documents in our index, so that only the documents we consider the best are eligible to be displayed in search results. We also experimented with Yandex technologies, developed a beta version of video search and started searching for leisure activities.

- In 2012 we began indexing foreign pages on a massive scale, so the number of documents we search through rose from 400 million to approximately 700 million (the number of documents our crawler knows of is actually much higher, but as mentioned above, only the best of them make it to the search results).

- Tablets and smartphones keep growing in popularity. As a result, in 2013 we switched our web search to a responsive layout, which adapts to the capabilities of the device. Later that year we started publishing trending search queries on our Twitter channel.

- In 2014 we enlarged the thumbnails of results displayed on the search engine results page (SERP) and added sitelinks to certain organic results, mostly for navigation queries. We started detecting users’ location and, when detection succeeds, displaying tailored hints and query suggestions. We also experimented with a matrix layout of the SERP instead of the traditional single-column one. However, after a series of user testing sessions, we decided to drop that idea and focus on the traditional layout.

- The year 2015 brought many changes for us. We moved part of our web crawler to a new data centre named Kokura, improved the indexing of HTTPS websites, upgraded the language detection, launched a brand new Freshbot to crawl websites and RSS channels faster, upgraded our Hadoop cluster to a major new version, and introduced our own image and video search solution to replace the unsatisfactory licensed technology powered by PicSearch. We also entered the smartphone and tablet environment with our own web browser app. Finally, we stepped up our efforts in fighting web spam, which resulted in a big update of our anti-spam solution called Jalapeño.

- In 2016 we continued to fight spam sites in the search results by deploying Jalapeño 2.0 and later Jalapeño 3.0. We started to focus more on the quality of the websites in our search results, which led to the release of another major update called Page Quality. Furthermore, we introduced a new search operator, “info:”, which enables page owners to verify that their sites are indexed correctly. We accelerated the downloading and indexing of videos and deployed an improved and much faster version of Freshbot called Mach II, which can handle twice as many resources as the original Freshbot. We also increased the size of the web crawler database, which allowed us to refine how we plan the crawling of websites. Hand in hand with enlarging the web crawler database, we increased the number of documents we actually index and search through.

We are consistently developing and improving our search engine in an endless effort to provide users with what they are looking for.

More about Seznam.cz company history